What?

- In recent years, the number of images shared over social media and saved as snapshots on mobile phones has grown considerably.

- Companies have been scouring the web for public photos associated with people’s names, which they can use to build enormous databases of faces and improve their facial recognition systems. In a sense, personal privacy is being lost.

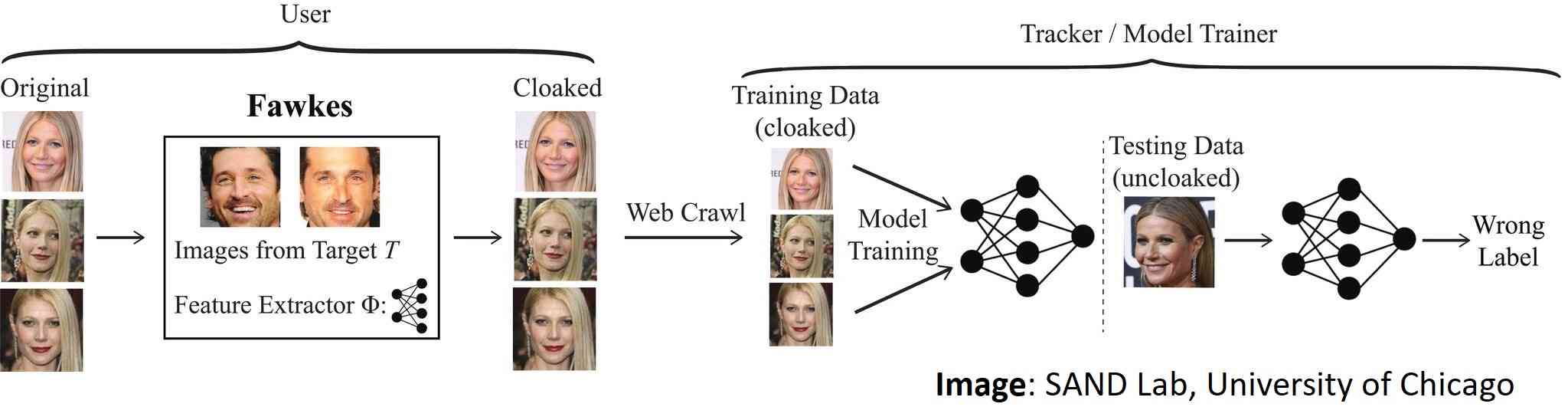

- To protect users from these kinds of facial recognition tools, the SAND Lab at the University of Chicago has developed Fawkes, an algorithm and software tool that runs locally on your computer and gives individuals the ability to limit how their own images can be used to track them.

- At a high level, Fawkes takes your personal images and makes tiny, pixel-level changes that are invisible to the human eye, in a process the researchers call “image cloaking”. The team behind Fawkes (named after Guy Fawkes, the character from V for Vendetta) published a paper on the tool’s algorithm earlier this year, titled “Fawkes: Protecting Privacy against Unauthorized Deep Learning Models”.

Why?

- According to an article in The New York Times, an unregulated facial recognition service has downloaded over 3 billion photos of people from the Internet and social media and used them to build facial recognition models for millions of citizens without their knowledge or permission.

- Facial recognition tools may simply kill the concept of privacy, since they can recognize anyone from a database of billions of pictures. According to one article, Facebook was also ordered in January to pay $550 million to settle a class-action lawsuit over its unlawful use of facial recognition technology.

- IBM CEO Arvind Krishna has said that IBM firmly opposes and will not condone uses of any facial recognition technology, including facial recognition technology offered by other vendors, for mass surveillance, racial profiling, violations of basic human rights and freedoms, or any purpose which is not consistent with the company’s values and Principles of Trust and Transparency. He added, “We believe now is the time to begin a national dialogue on whether and how facial recognition technology should be employed by domestic law enforcement agencies.”

How?

- The Fawkes tool converts, or cloaks, an image by subtly altering some of the features that facial recognition systems depend on when they construct a person’s face print.

- In a research paper, reported earlier by OneZero, the team describes “cloaking” photos of the actress Gwyneth Paltrow using the actor Patrick Dempsey’s face, so that a system learning what Ms. Paltrow looks like based on those photos would start associating her with some of the features of Mr. Dempsey’s face.

- The changes, usually not perceptible to the naked eye, would prevent the system from recognizing Ms. Paltrow when presented with a real, uncloaked photo of her.

- In testing, the researchers were able to fool facial recognition systems from Amazon, Microsoft and the Chinese tech company Megvii.
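
The cloaking idea described above can be illustrated with a small sketch. This is a toy stand-in under stated assumptions, not the Fawkes implementation: the feature extractor here is a made-up random linear map (the real tool relies on deep face-recognition models), images are flattened 8x8 arrays of random pixels, and the "invisible change" limit is a simple cap on how far each pixel may move. The function name `cloak` and all parameters are hypothetical.

```python
import numpy as np

def cloak(image, target, extractor, budget=0.03, steps=300, lr=0.05):
    """Nudge `image` so its features move toward those of `target`.

    Toy stand-in: `extractor` is a fixed linear map, and the change to
    every pixel is capped at `budget` (pixel values live in [0, 1]).
    """
    target_features = extractor @ target
    delta = np.zeros_like(image)
    for _ in range(steps):
        # Gradient of ||extractor @ (image + delta) - target_features||^2
        residual = extractor @ (image + delta) - target_features
        grad = 2.0 * extractor.T @ residual
        delta = delta - lr * grad
        # Project back: keep the change tiny and the pixels valid.
        delta = np.clip(delta, -budget, budget)
        delta = np.clip(image + delta, 0.0, 1.0) - image
    return image + delta

rng = np.random.default_rng(0)
extractor = rng.normal(size=(16, 64)) / 8.0   # hypothetical feature extractor
original = rng.uniform(0.2, 0.8, size=64)     # flattened 8x8 "photo" of the user
target = rng.uniform(0.2, 0.8, size=64)       # flattened 8x8 "photo" of someone else
cloaked = cloak(original, target, extractor)

def feature_distance(a, b):
    """Distance between two images as seen by the toy extractor."""
    return float(np.linalg.norm(extractor @ a - extractor @ b))

print("largest pixel change:", np.max(np.abs(cloaked - original)))
print("distance to target before:", feature_distance(original, target))
print("distance to target after: ", feature_distance(cloaked, target))
```

In this sketch no pixel moves by more than the budget, yet the cloaked image sits closer to the target identity in feature space than the original did. With a linear extractor the shift is modest; the sketch only shows the shape of the optimization, not the strength of the effect reported in the paper.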

For?

- People are feeling a sense of privacy exhaustion. There are too many ways that our conventional sense of privacy is being exploited in real life and online.

- A platform like Facebook or Instagram could adopt Fawkes to prevent third parties from scraping its users’ images to identify them. The social media platform could say: give us your real photos, we will cloak them, and then we will share them with the world so you will be protected.

- The cloak effect is not easily detectable by humans or machines and will not cause errors in model training. However, when someone tries to identify you by presenting an unaltered, uncloaked image of you (e.g. a photo taken in public) to the model, the model will fail to recognize you.
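
To see why a model trained on cloaked photos can fail on an uncloaked one, here is a minimal sketch under strong assumptions. It uses a hypothetical 2-D feature space and a nearest-centroid recognizer, and the shift that cloaking causes in feature space is hard-coded rather than produced by a real cloak. None of the names or numbers come from Fawkes itself.

```python
import numpy as np

rng = np.random.default_rng(1)

def sample(center, n=20, spread=0.3):
    """Draw n feature vectors scattered around a center point."""
    return np.asarray(center) + spread * rng.normal(size=(n, 2))

# Hypothetical 2-D feature space.
real_user = sample([0.0, 0.0])      # features of the user's uncloaked photos
cloaked_user = sample([4.0, 4.0])   # assumed position of cloaked photos in feature space
other_person = sample([1.5, 0.0])   # features of a different person

# The tracker only ever sees the cloaked photos labelled as the user.
centroids = {
    "user": cloaked_user.mean(axis=0),
    "other": other_person.mean(axis=0),
}

def recognize(feature):
    """Return the label whose centroid is closest to the feature vector."""
    return min(centroids, key=lambda name: np.linalg.norm(feature - centroids[name]))

predictions = [recognize(f) for f in real_user]
user_hits = predictions.count("user")
print("uncloaked photos recognized as the user:", user_hits, "of", len(real_user))
```

Training itself goes through without any error, and cloaked photos are still labelled as the user. The uncloaked photos, however, land far from what the model learned as "the user", which is the failure mode the bullet above describes.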

Folks Activity?

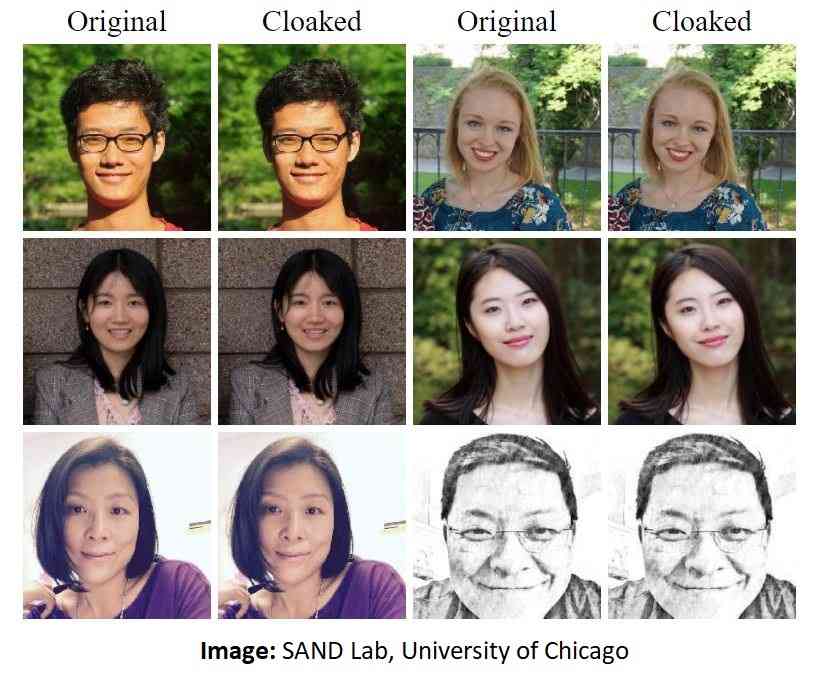

- These are example images of real and cloaked photos produced with the Fawkes tool; there is not much visible difference between them.

- We can also cloak our own personal images for privacy using the user-friendly versions of Fawkes available for Mac and Windows.

- If any facial recognition tool tries to analyze the picture, it won’t be able to match it with any picture from its database.

- The SAND Lab team is also working on Android and iOS mobile applications, which will be easier to use. Because it is intentionally difficult to tell cloaked images from the originals, the SAND Lab is looking into adding small markers into the cloak as a way to help users identify cloaked photos.

- For more information, watch the video below, available on YouTube.

Our Activity

- We tried Fawkes on an image of MS Dhoni, one of India’s biggest cricket heroes.